What finally motivated me to build an image classification mobile app?

I recently took the Deep Learning Part 1 online course offered by Jeremy Howard. Having taken other machine learning MOOCs before, including courses from Andrew Ng, Sebastian Thrun, and Geoffrey Hinton, I can say that Jeremy’s course is indeed a bit different from the others. I don’t know if this is because of the top-down approach he used to present the topic (he quoted research showing that it helps students learn, and I agree), his strong emphasis on the practical side of AI, or the self-deprecating humour he uses to poke fun at his previous life as a management consultant. The correct answer is probably all of the above.

Now that I am eagerly waiting for Part 2 of his course, I have decided to heed his advice and build a (supposedly) small project to practice the techniques he taught in class using the fastai library. This is an effect that the previous courses I took didn’t have on me.

In this and future posts, I will talk about how I progress on the project and discuss topics that come up which I find interesting.

So what is fastai? Or fast.ai?

Jeremy started a company, fast.ai, to teach AI to the masses, with an interesting endgame:

Our goal at fast.ai is for there to be nothing to teach.

fast.ai offers free online courses, which are recordings of his classes at USF.

In 2017, he decided to switch the deep learning framework used in his course from Keras and TensorFlow to PyTorch. But he didn’t stop there. Instead, he took the idea behind Keras and built an opinionated library, fastai, on top of PyTorch. He added helpful tools and incorporated the latest best practices, which make applying AI to common problems a breeze.

PyTorch, a rewrite of Torch (a defunct ML library based on Lua), has its roots in the ML research community and has recently been getting a lot of attention in the wider ML community. I think doing the fast.ai course would be the perfect way for me to start using it.

Having said that, a key point Jeremy made that resonates with me is that we should not focus on learning a particular framework (though this is necessary), as newer and better frameworks will always be developed. Instead, we should focus on learning the underlying AI concepts and techniques, which will allow us to switch frameworks if needed.

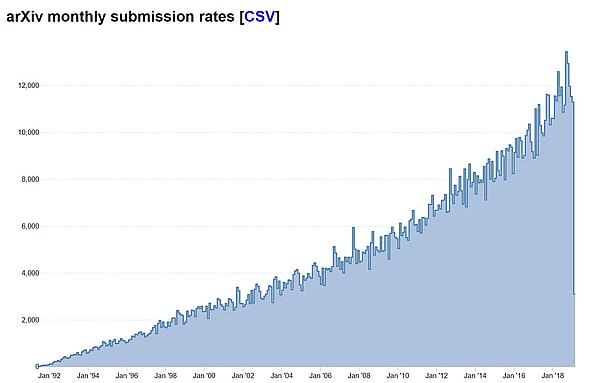

Keeping up with AI research is hard enough (source)

Why Google Lens

Computer vision has always been a popular branch of AI for newcomers to the field, as the basic concepts are easy to understand when presented with vivid visuals. MNIST is basically the Hello World of ML. While recent advances in AI have made writing a simple image classifier (e.g. classifying digits, or dogs vs cats) easy, requiring only a few lines of code, it gets much harder if we want to classify an object from a much larger number of possible labels.

Building this should be much quicker

Using Google Lens as an example, it is very good at recognizing objects from a few pre-defined categories, such as landmarks, plants, and dog breeds. But if I want to identify an object that is not in these categories, say the species of a butterfly, or the name of a Van Gogh painting, then it wouldn’t work. Google Lens will suggest images which contain objects that look similar to my photo, but I would still need to click on that image and search the page for the information that I’m looking for.

Now if I had a trained model for identifying butterfly species and another model for identifying Van Gogh paintings, and I could tell Google Lens beforehand which model to use, then the aforementioned problem might be solved.
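
To make the idea a bit more concrete, here is a minimal sketch in Python of what "telling the app which model to use" could look like. Everything in it is made up for illustration: the function names, the category keys, and the labels they return are hypothetical, and the two stub functions simply stand in for trained models.

```python
from typing import Callable, Dict

# A classifier takes raw image bytes and returns a label.
Classifier = Callable[[bytes], str]


# Hypothetical stand-ins for trained models. A real app would run a
# trained network here instead of returning a fixed label.
def classify_butterfly(image: bytes) -> str:
    return "monarch"


def classify_van_gogh(image: bytes) -> str:
    return "The Starry Night"


# Registry keyed by the category the user picks before taking a photo.
MODELS: Dict[str, Classifier] = {
    "butterflies": classify_butterfly,
    "van_gogh": classify_van_gogh,
}


def identify(category: str, image: bytes) -> str:
    """Route the image to the model registered for the chosen category."""
    if category not in MODELS:
        raise KeyError(f"no model registered for category: {category!r}")
    return MODELS[category](image)
```

With this shape, supporting a new kind of object only means training one more model and adding one more entry to the registry; the rest of the app would not need to change.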

This sounds to me like a simple and fun project to work on, and it might even be useful at times (at least until Google Lens, or Bing Visual Search, can start doing this).

Let’s try it.